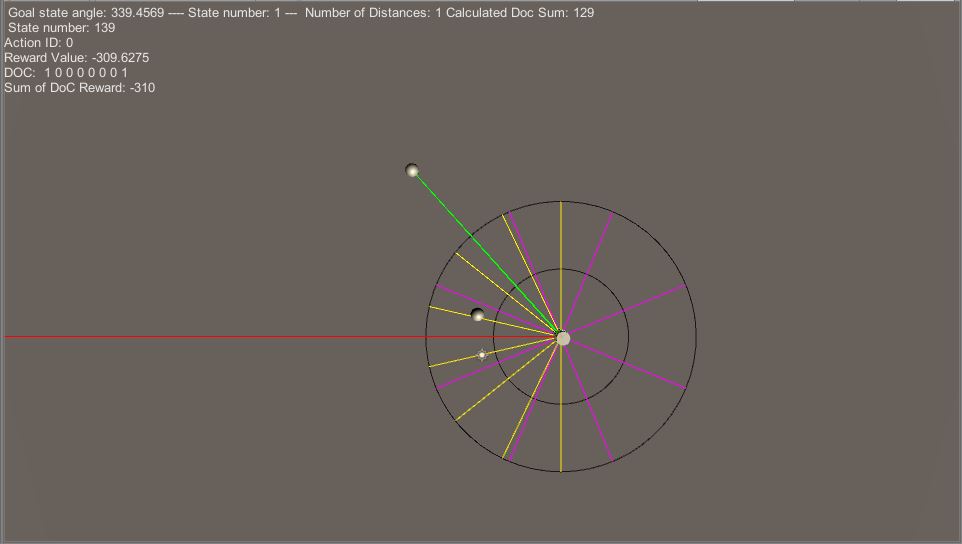

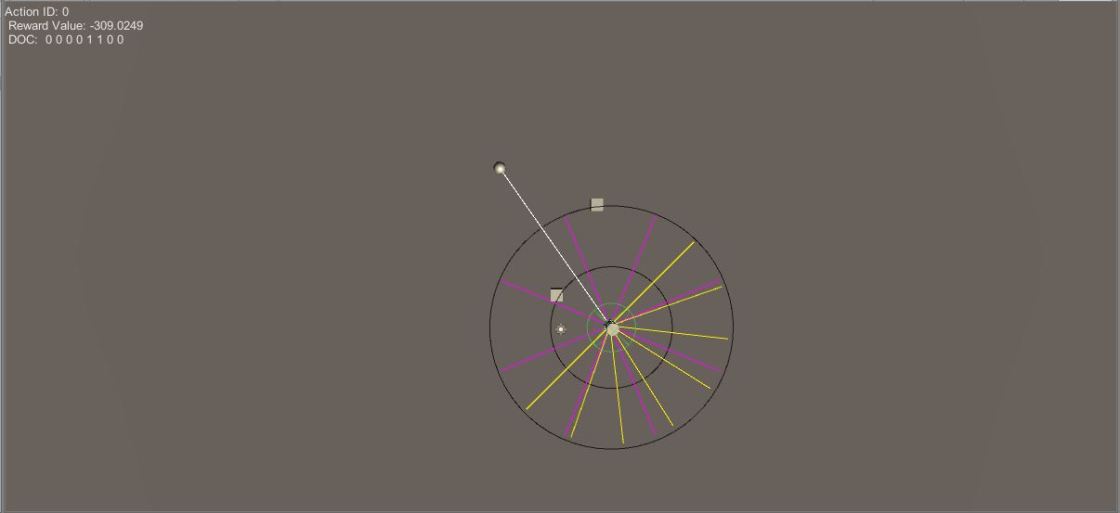

I managed to train an agent to make it reach its goal. My error was in the reward calculation. Unity gives the angle between two vectors as degrees but cosine is taking radian as input and returns radian. I fixed this issue and the result is way better.

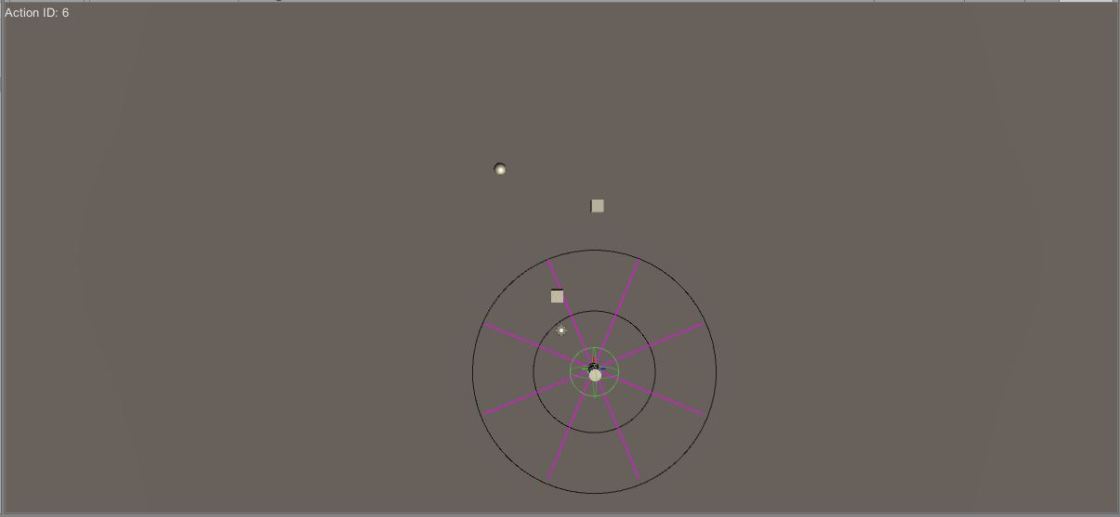

Then I tried it with obstacles. This provided a stranger result. I am not sure why this is the case but the agent, for some reason, goes towards the obstacles but it still reaches its goal regardless.

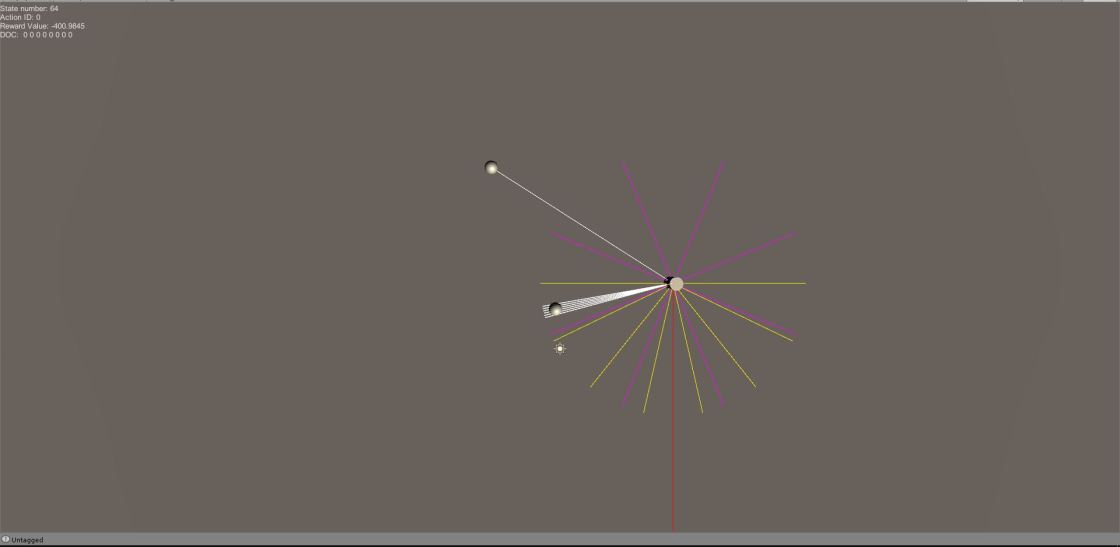

Here is another test with a better collision avoidance. I am not sure yet why it provides better results from time to time.

Now I will try this with different scenarios and try to save the Q-Table in some way (maybe a text file)